Published January 9, 2026, by Brenda Álvarez, Senior Writer at NEA Today.

“Artificial Intelligence (AI) is like having a personal assistant that wants to help you. It thinks the world of you. In fact, you are its world.”

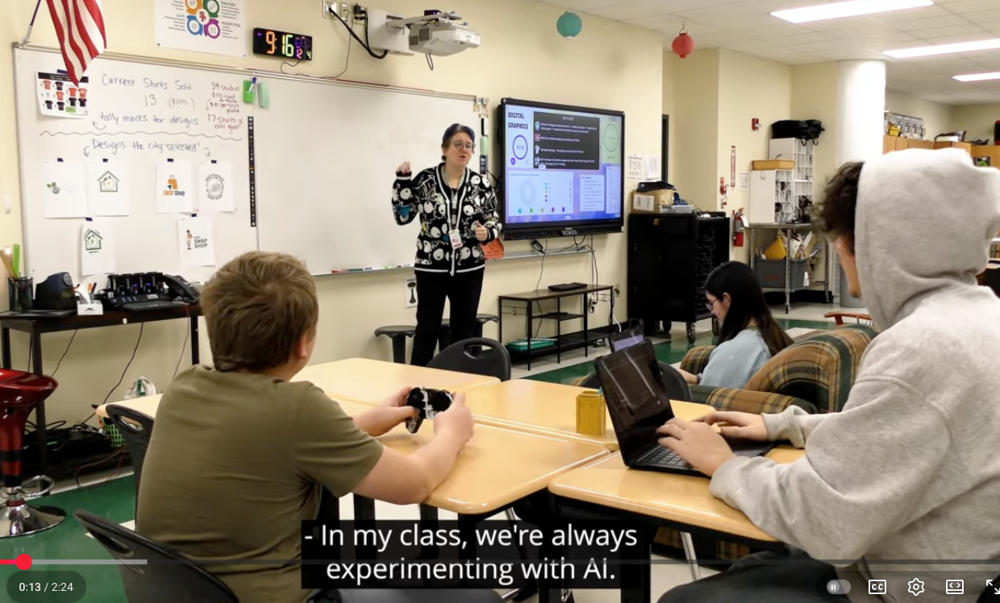

This is how computer science and media electives teacher Julie York explains artificial intelligence to her students at South Portland High School, in Maine. But, she continues, this is where it gets tricky:

“So you have this biased assistant that thinks you can never be wrong or ask for something dangerous. Keep in mind this assistant only has a middle school education and lacks ethics, but is really fast at doing some things, but not all things. Sometimes, it will want to help you so much, just to make you happy, that it will lie to you.”

STILL UNDER REVIEW

While proponents promise that AI will revolutionize education, its role in learning is still being defined by humans.

For some, AI is a trusted tool, a helper, a thought partner, and a guide that amplifies creativity and saves time. For others, it’s a shortcut that risks stripping away what matters most in learning: the human connection.

So how are educators using AI, and what boundaries would they never cross? Four educators, from California, Maine, Michigan, and Utah, are testing limits, setting expectations, and underscoring that the most important factor in using AI is the human one.


AI IN HIGH SCHOOL

Back in Maine, York worked with a group of her students to learn scriptwriting and produce a short video: a Spanish-language soap opera, or telenovela, that, in true telenovela fashion, involved a crime scene. The script called for a corpse. York considered having a student play the part, but they didn’t have time for that, so she asked AI to create it.

“All of the bodies were breathing,” York laughs. “I needed to use three models to just get a dead body horizontal with an ax to its head.”

After multiple prompts produced various interpretations, York gave up. The final version was a barely breathing body lying on the floor with an ax coming out of the person’s mouth like a flower.

“I was just like, you know what, we’re good. … This is the one we’re using. I’m walking away,” she says.

It turns out the AI video generators York used (Veo, Sora, and Kling) won’t make dead bodies: they have built-in safeguards to prevent misuse or harmful behavior. But the AI never told her that.

‘IT’S IMPOSSIBLE TO AVOID’

York says she uses AI a lot, and she’s been using it for a long time.

“AI has been a part of some [computer] programming for a long time,” she says, pointing to Bluetooth and facial recognition as examples. “But then all of a sudden, these large language models came out, and I started seeing [it] in all my software. It is impossible to avoid.”

AI is only getting better, York says, comparing it to the internet of yesteryear: “Using the internet meant your whole house couldn’t have a phone or you had to wait 20 minutes for a website to load, and God forbid it had graphics! If you think about it as a tool, the internet now is almost ubiquitous.”

She adds, “In five years, AI is going to look completely different, and … the babies being born today won’t know [anything different].”

York encourages educators to learn how to use AI effectively, model it for students, and talk about the pros and cons. She also advises following the district’s AI policy, if one exists, and not ignoring each tool’s privacy policy.

“There are some AI programs that have really atrocious privacy settings,” she says. “Pay attention to where the data is going.”

Educators should not pretend AI doesn’t exist, York advises.

“You want your students to be successful … and be prepared to go on their own in the real world,” she adds. “And we can’t not talk about things because we think they’re bad. … Eventually, this is something they will have to face.”